Amazon Nova In Action - Micro, Lite and Pro

Last Updated: 2025-01-24

Disclaimer: The details described in this post are the results of my own research and investigations. While some effort has been expended in ensuring their accuracy - with ubiquitous references to source material - I cannot guarantee that accuracy. The views and opinions expressed on this blog are my own and do not necessarily reflect the views of any organization I am associated with, past or present.

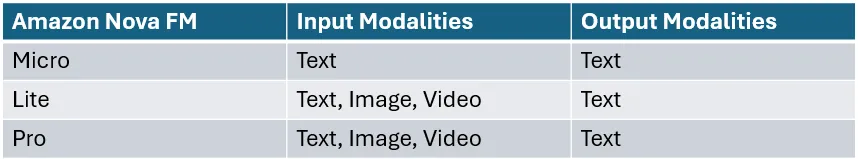

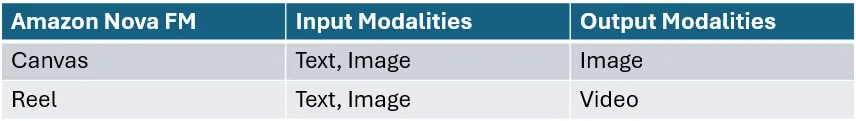

One of the more significant events at re:Invent 2024 was the announcement of Amazon Nova, a suite of multi-modal, “state-of-the-art foundation models”[1] with deep integration in Amazon Bedrock. In one respect, until Nova’s release, Bedrock was similar to Hugging Face as a store of third-party foundation models (putting Amazon Titan to one side) that companies could use within their workflows, albeit with advanced functionality - Knowledge Bases, Agents and Guardrails - to extend the capabilities of the AI. However, the introduction of Amazon Nova signals AWS’s intention to tussle with the likes of Anthropic and OpenAI directly, offering both “Understanding” models[1] in the form of:

- Amazon Nova Micro

- Amazon Nova Lite

- Amazon Nova Pro

and “Creative Content Generation” models in the form of:

- Amazon Nova Canvas

- Amazon Nova Reel

In this post, we will see how a company can leverage Amazon Nova foundation models to analyze data and extend their workflows via the Amazon Bedrock Invoke API.

Amazon Nova In Action

Let me present a hypothetical scenario to you.

A fictional company called Cardboard & Beyond has one product: a cardboard box.

With every sale, the customer is invited to give feedback to the company in one or more of the following forms:

- A written message

- An image

- A video

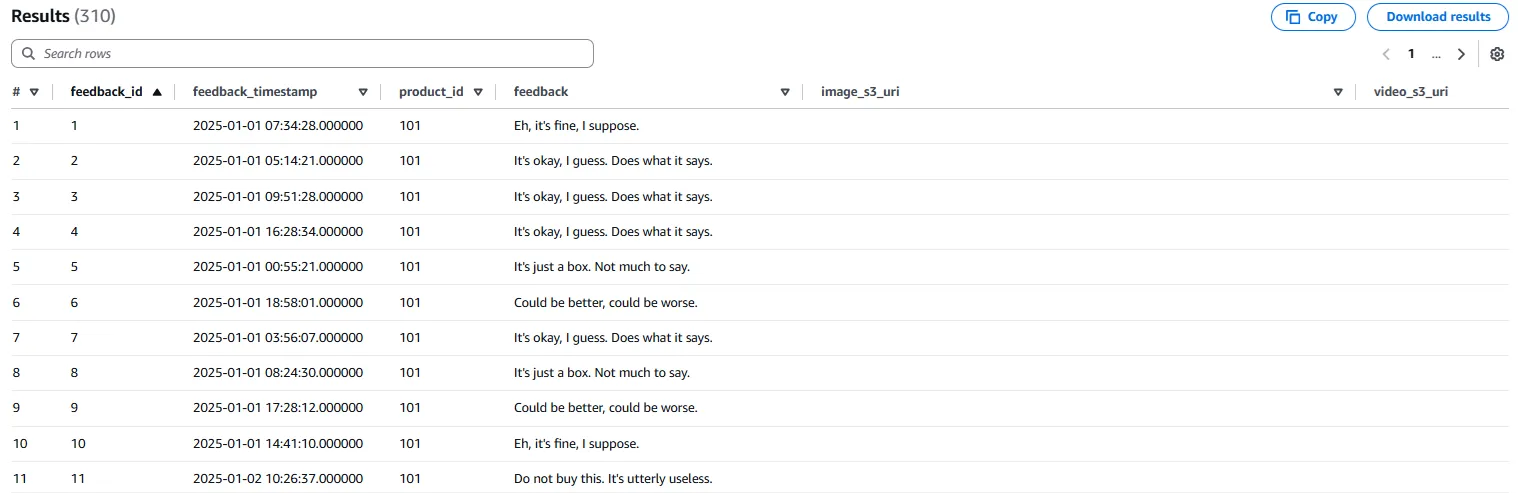

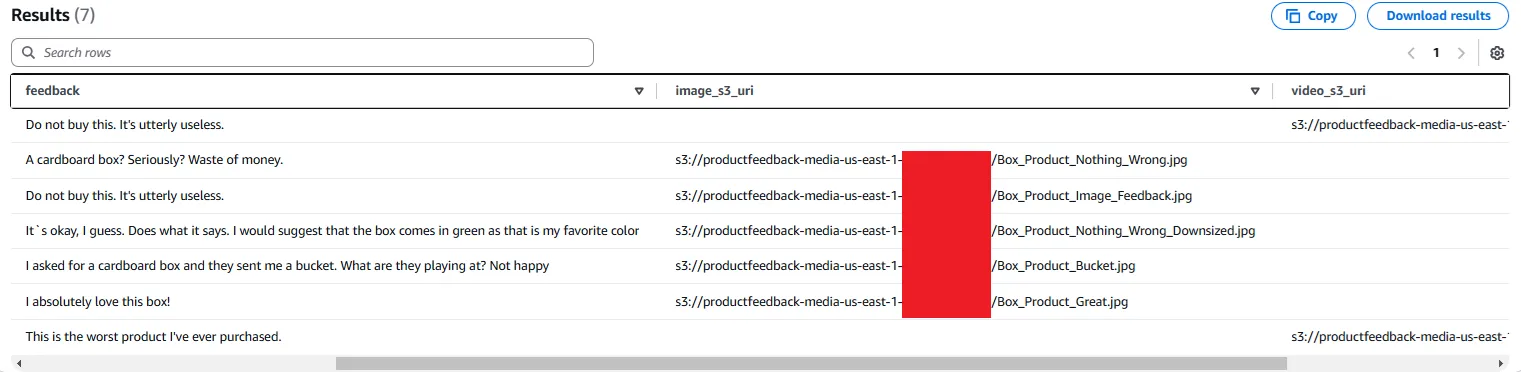

We have been tasked with deriving insights from the feedback using foundation models from Amazon Nova, however best we see fit. Feedback data are delivered to the company on a daily basis into an Athena Iceberg table like this:

There are 10 rows of data per day. Every row has written feedback, and some rows also contain an S3 URI to a customer-provided image or video.

Data and media are stored in two S3 buckets:

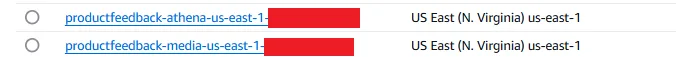

The company’s request for insights is rather open-ended. After some thought, we decide to test all of the available Nova foundation models, using each to perform a particular task.

In essence, we will leverage the documented capabilities of the different Nova FMs to identify feedback sentiment, understand review media, generate new concepts and even produce a visual representation of how customers like or dislike our product on a given day.

We will start with Nova Micro.

Micro

Setup

Amazon Nova Micro is the cheapest model at $0.000035 per 1000 input tokens and $0.00014 per 1000 output tokens. It is a text-to-text model with very low latency, which AWS suggests can be used to generate product descriptions and execute similar tasks[2].

Perfect. This is well-suited to our task. We want to summarize our customer feedback for each day and to classify the overall daily sentiment of that feedback as positive, neutral or negative.

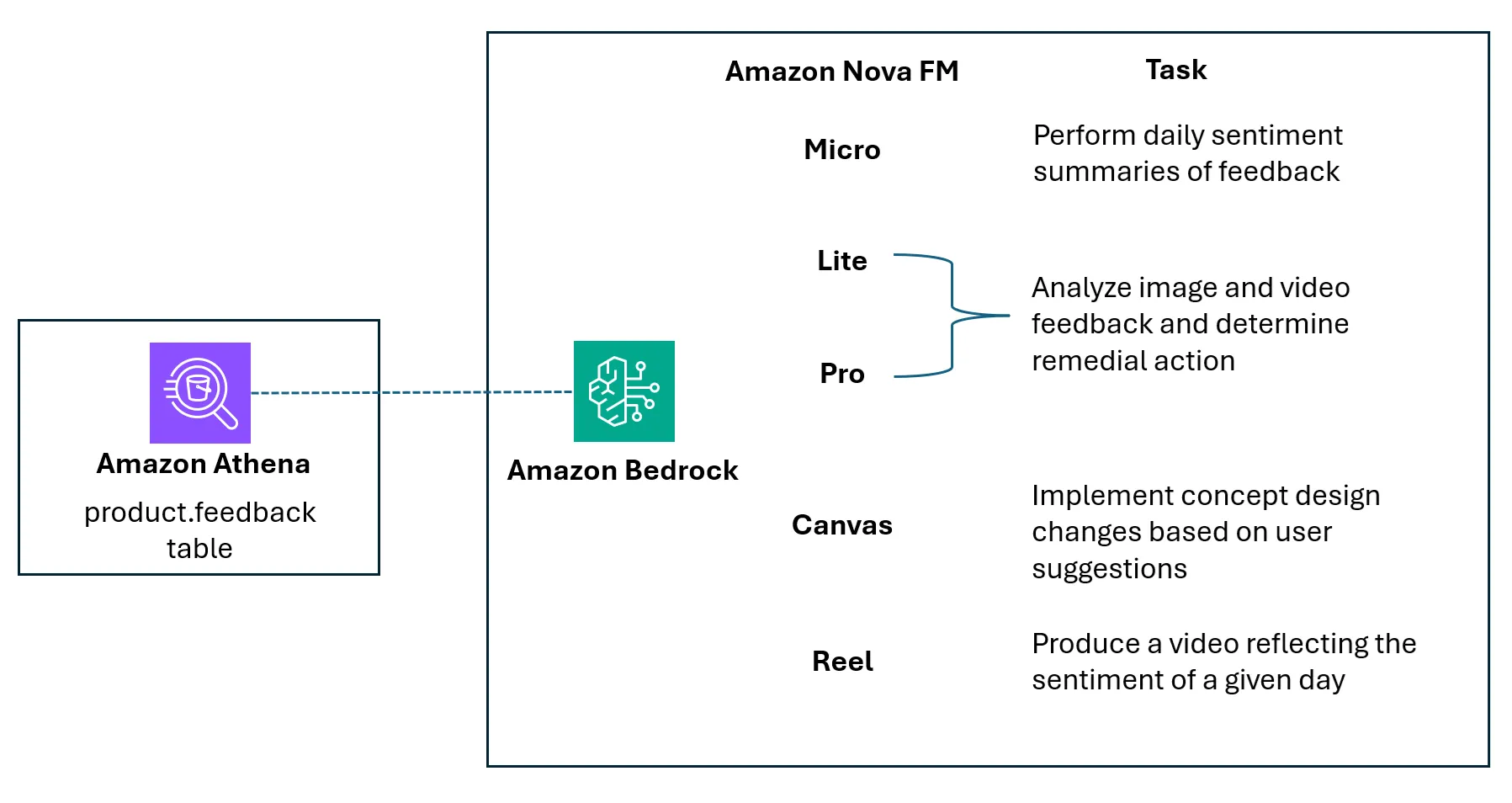

This is a general plan for implementing this:

Throughout this post, we will only provide the call to Bedrock for each model; the data preparation will be left for another day.

    import json

    import boto3

    # Bedrock Runtime client; region and credentials are taken from the environment
    bedrock_runtime_client = boto3.client("bedrock-runtime")

    # Define the model ID for Nova Micro
    MODEL_ID = 'us.amazon.nova-micro-v1:0'

    # Prepare the request payload.
    # `date` and `feedback_list` come from the data preparation step (not shown).
    payload = {
        "system": [
            {
                "text": "You are a feedback summarizer for a product. When the user provides you with a list of feedback messages, you should summarize all messages in one line, and indicate 'positive','negative', or 'neutral'. Provide the result in the form { \"summary\": \"<summary>\", \"sentiment\": \"<sentiment>\"}"
            }
        ],
        "schemaVersion": "messages-v1",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "text": f"Here is a list of feedback messages for a product on {date}:\n{feedback_list}"
                    }
                ]
            }
        ],
        "inferenceConfig": {
            "max_new_tokens": 300,
            "top_p": 0.1,
            "top_k": 20,
            "temperature": 0.3
        }
    }

    # Invoke the model
    response = bedrock_runtime_client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps(payload)
    )

In the above block, we have a system text block that tells Micro how to behave[3]. This establishes the general tasks for the model:

- Given a list of feedback, summarize them

- Categorize the summaries into positive, neutral and negative

- Output the results in JSON form

The user text provides the actual feedback messages for the given date.
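Since the data preparation is out of scope here, the following is only a minimal sketch of how the user text might be assembled from a day of feedback. The helper names `format_feedback_list` and `build_user_text` are my own inventions for illustration, and the numbered-list layout is an assumption rather than the format used to produce the results in this post.

```python
from datetime import date


def format_feedback_list(messages: list[str]) -> str:
    """Render feedback messages as a numbered list, one message per line."""
    # Drop empty or whitespace-only messages before numbering
    cleaned = [m.strip() for m in messages if m and m.strip()]
    return "\n".join(f"{i}. {m}" for i, m in enumerate(cleaned, start=1))


def build_user_text(day: date, messages: list[str]) -> str:
    """Build the user prompt text in the same shape as the Micro payload."""
    feedback_list = format_feedback_list(messages)
    return (
        f"Here is a list of feedback messages for a product on {day.isoformat()}:\n"
        f"{feedback_list}"
    )


print(build_user_text(date(2025, 1, 24), ["Great box", "Arrived damaged"]))
```

Numbering the messages makes it a little easier to spot when the model has skipped or merged feedback, but any unambiguous delimiter would probably do.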

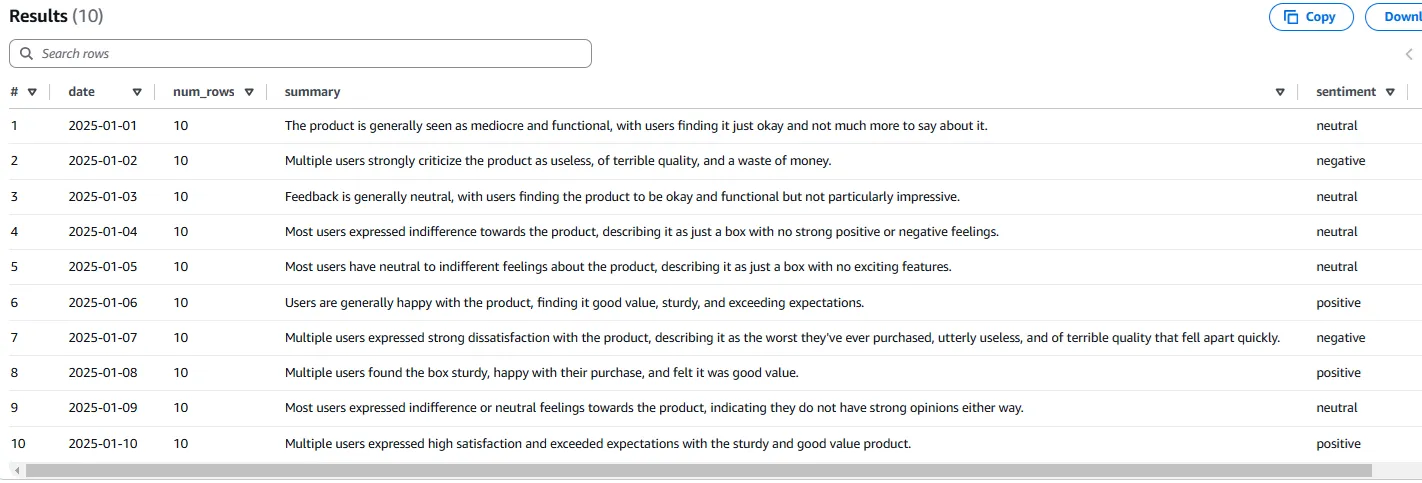

Executing the Micro model for each day of feedback produces JSON responses. These are then written to a Parquet file in S3 and crawled for querying in Athena.
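For completeness, here is a hedged sketch of how the JSON might be pulled out of the Bedrock response before it is written anywhere. It assumes the Nova response body has the generated text at `output.message.content[0].text`, and that the model may occasionally wrap its JSON in extra prose. The function names `extract_text` and `parse_summary` are hypothetical.

```python
import json

VALID_SENTIMENTS = {"positive", "neutral", "negative"}


def extract_text(response: dict) -> str:
    """Read the generated text from an invoke_model response for a Nova model."""
    body = json.loads(response["body"].read())
    return body["output"]["message"]["content"][0]["text"]


def parse_summary(text: str) -> dict:
    """Parse the model's JSON answer, tolerating extra text around the braces."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError(f"No JSON object found in model output: {text!r}")
    result = json.loads(text[start : end + 1])
    sentiment = str(result.get("sentiment", "")).strip().lower()
    if sentiment not in VALID_SENTIMENTS:
        raise ValueError(f"Unexpected sentiment: {sentiment!r}")
    return {"summary": str(result.get("summary", "")).strip(), "sentiment": sentiment}
```

With the `response` object from the call above, `parse_summary(extract_text(response))` would give a dictionary that is ready to be collected into rows. Raising on an unexpected sentiment is a design choice; logging and skipping the day would be equally reasonable.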

Results

The results from the Micro model were actually quite impressive:

The model has successfully provided a summary of the different feedback on each day, as well as giving an accurate sentiment. Neat.

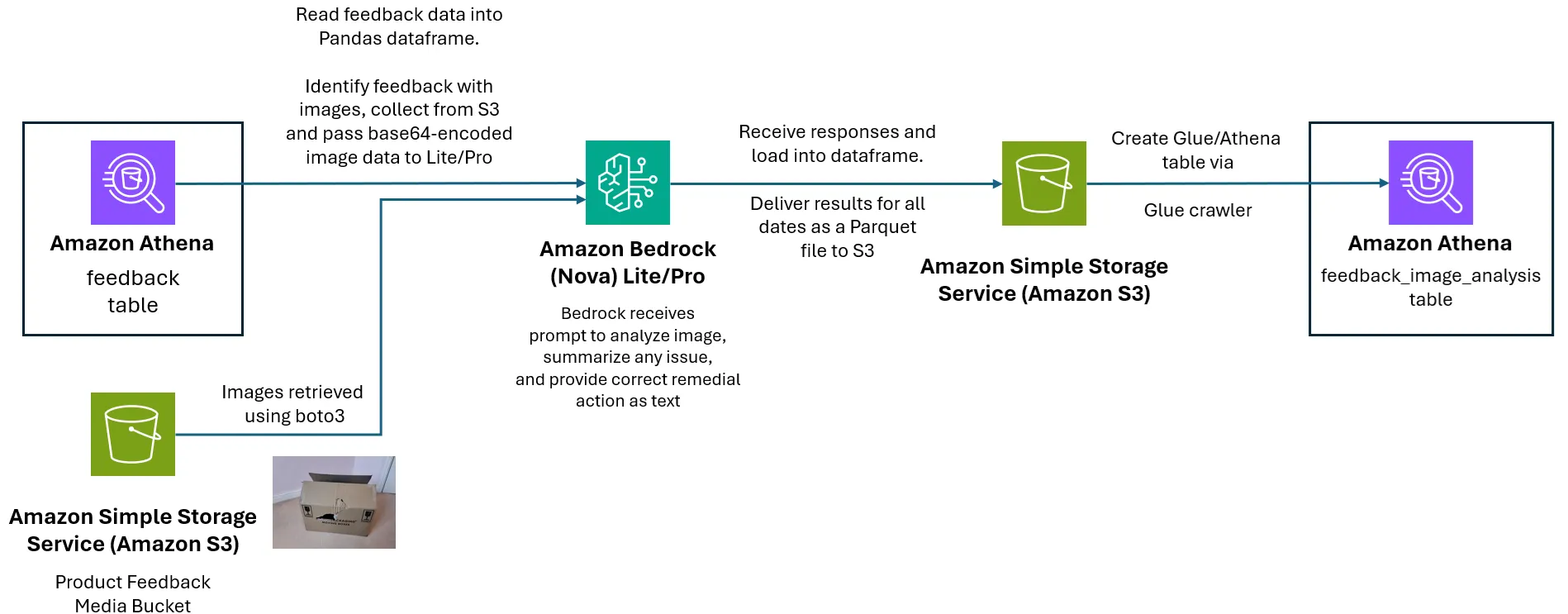

Lite/Pro

Setup

Nova Lite and Nova Pro are text, image and video to text models. Both are still quite cheap, at $0.00006 (Lite) and $0.0008 (Pro) per 1000 input tokens, and $0.00024 (Lite) and $0.0032 (Pro) per 1000 output tokens.
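To put those prices into perspective, here is a rough cost calculation using the per-1000-token figures quoted in this post. The request size of 2,000 input tokens and 300 output tokens is purely hypothetical, and `estimate_cost` is an illustrative helper, not part of any AWS SDK.

```python
# Prices in USD per 1,000 tokens, as quoted in this post
PRICE_PER_1K = {
    "micro": {"input": 0.000035, "output": 0.00014},
    "lite": {"input": 0.00006, "output": 0.00024},
    "pro": {"input": 0.0008, "output": 0.0032},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD of a single request."""
    prices = PRICE_PER_1K[model]
    input_cost = (input_tokens / 1000) * prices["input"]
    output_cost = (output_tokens / 1000) * prices["output"]
    return input_cost + output_cost


# Hypothetical request: 2,000 input tokens and 300 output tokens
for model in PRICE_PER_1K:
    print(f"{model}: ${estimate_cost(model, 2000, 300):.6f}")
```

Bear in mind that images and videos are converted into input tokens too, so a media-heavy request will likely cost more than a text-only one of similar length.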

Our original feedback data contained some rows with images and video feedback from customers. We will use these models to:

- Summarize any issues that can be seen in a provided image

- Summarize any issues that can be seen in a provided video

- Identify a remedial action to take in each case - one of: Issue Refund, No Action, Contact Customer

Aside: A remedial action is a great starting point for implementing Bedrock Agents. For example, if the assigned remedial action is ‘Issue Refund’, a Bedrock Agent could trigger an action to send an automated refund - of course, blindly doing this without additional checks is very risky! Alternatively, ‘Contact Customer’ could schedule a call with the Customer Service team.

The feedback data itself contains S3 URI references to image and video objects in the media bucket. When calling Nova Lite and Nova Pro via the Bedrock Invoke API, images need to be read as bytes and passed in the payload as a base64-encoded string. Videos can be passed in the same way, but a second option is to provide a URI to the video object in S3[4].
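As a minimal sketch of that image conversion step, assuming the media is read straight from S3: the helper names `split_s3_uri` and `load_image_as_base64` are hypothetical, and the S3 client is passed in as an argument so that the snippet does not depend on how the client is created.

```python
import base64
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> tuple[str, str]:
    """Split 's3://bucket/key' into (bucket, key)."""
    parsed = urlparse(uri)
    key = parsed.path.lstrip("/")
    if parsed.scheme != "s3" or not parsed.netloc or not key:
        raise ValueError(f"Not a valid S3 URI: {uri!r}")
    return parsed.netloc, key


def load_image_as_base64(s3_client, uri: str) -> str:
    """Download an object from S3 and return its bytes as a base64 string."""
    bucket, key = split_s3_uri(uri)
    raw = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    return base64.b64encode(raw).decode("utf-8")
```

With a client created via `boto3.client("s3")`, the returned string can be placed in the `bytes` field of the image block in the request payload.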

The implementation plan is similar to our Micro experiment:

Image Analysis

So, what images will be tested? I took several photos to cover a few different scenarios that our imaginary customers might have experienced.

Let’s break this down:

Scenario 1

The customer has been provided with a damaged box. Their written feedback is: “Do not buy this. It’s utterly useless.”

A glowing review.

Note: The image above has had some branding marks blurred. The blurred image was used in the image analysis.

Scenario 2

The customer has been provided with a fully-functioning box. They’ve written the word ‘Books’ on it. Their written feedback is: “A cardboard box? Seriously? Waste of money.”

They seem to be angry despite receiving what they paid for.

Scenario 3

The customer has been provided with a fully-functioning box. They’ve written the word ‘Books’ on it and they seem to be happy with their purchase. Their written feedback is: “I absolutely love this box!”

Whatever floats their boat.

Scenario 4

The customer has been provided with a bucket instead of a cardboard box. Their written feedback is: “I asked for a cardboard box and they sent me a bucket. What are they playing at? Not happy”.

Cardboard & Beyond only sell cardboard boxes - the ‘Beyond’ is just marketing.

Here is the code I used to execute both Nova Lite and Nova Pro:

    # Define the model ID for Nova Lite/Pro
    #MODEL_ID = 'us.amazon.nova-lite-v1:0'
    MODEL_ID = 'us.amazon.nova-pro-v1:0'

    remedial_action_list = ["Issue Refund", "No Action", "Contact Customer"]

    # Prepare the request payload.
    # `user_feedback` is the customer's written feedback and `image_body` is the
    # image as a base64-encoded string; both come from data preparation (not shown).
    payload = {
        "system": [
            {
                "text": "Act as an image-analysis assistant. When the user provides you with an image of a product and the name of the product, you must analyse and summarize what, if anything, is wrong with the product using the image and the user feedback. Then, you should identify the correct remedial action from the following list: %s. Provide the result in the form { \"image_analysis\": \"<image_analysis>\", \"remedial_action\": \"<remedial_action>\" }" % str(remedial_action_list)
            }
        ],
        "schemaVersion": "messages-v1",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "text": f"Here is an image of the faulty product. The product is a cardboard box. The user feedback is {user_feedback}"
                    },
                    {
                        "image": {
                            # The request schema[4] lists jpeg, png, gif and webp
                            "format": "jpeg",
                            "source": {
                                "bytes": image_body
                            }
                        }
                    }
                ]
            }
        ],
        "inferenceConfig": {
            "max_new_tokens": 300,
            "top_p": 0.1,
            "top_k": 20,
            "temperature": 0.3
        }
    }

    # Invoke the model
    response = bedrock_runtime_client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps(payload)
    )

Here, the system text asks the model to analyze the images and detect issues with the product, if any exist. With the system text and user feedback together, the model is also asked to provide a remedial action as a result of its understanding of the feedback, taken from a prescribed list of: Issue Refund, No Action, Contact Customer.
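Because the remedial action may end up driving automation, it seems prudent to check the model's answer against the prescribed list before acting on it. The following is only a sketch: `resolve_action` is a hypothetical helper, and falling back to Contact Customer when the answer cannot be validated is my own assumption, chosen so that unclear cases reach a human.

```python
import json

ALLOWED_ACTIONS = ("Issue Refund", "No Action", "Contact Customer")
FALLBACK_ACTION = "Contact Customer"  # assumption: route unclear cases to a human


def resolve_action(model_text: str) -> str:
    """Return a validated remedial action from the model's JSON answer."""
    try:
        # Take the outermost braces in case the model adds text around the JSON
        start, end = model_text.index("{"), model_text.rindex("}")
        proposed = json.loads(model_text[start : end + 1]).get("remedial_action", "")
    except ValueError:
        # Covers both a missing brace and malformed JSON
        return FALLBACK_ACTION
    # Compare case-insensitively, but always return the canonical spelling
    lookup = {action.lower(): action for action in ALLOWED_ACTIONS}
    return lookup.get(str(proposed).strip().lower(), FALLBACK_ACTION)


print(resolve_action('{"image_analysis": "Box is crushed", "remedial_action": "issue refund"}'))
```

Anything the model invents outside the list, such as a voucher or a replacement, is mapped to the fallback rather than passed downstream.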

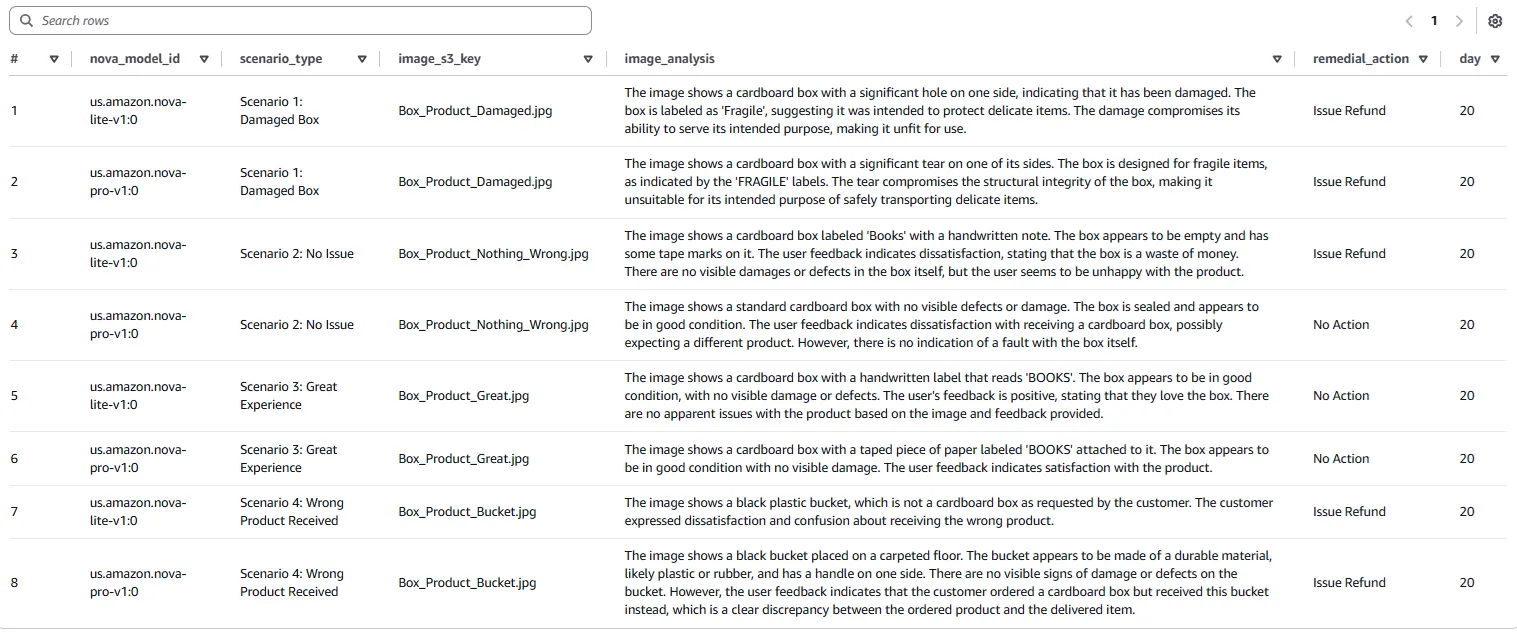

Image Analysis - Results

The image analysis has clearly been a success for both models, with each correctly identifying the contents of each image and applying the context of the user feedback to its response. In each case, the FMs have identified a remedial action of Issue Refund or No Action from their interpretation of the situation.

If we were to consider each scenario we might, as humans, have decided on the following actions:

- Scenario 1: Damaged Box -> Issue Refund

- Scenario 2: No Issue -> No Action

- Scenario 3: Great Experience -> No Action

- Scenario 4: Wrong product received -> Issue Refund

In general, both Nova Lite and Pro have correctly decided the remedial action to take, including opting to issue a refund when the customer received a bucket. The exception is Scenario 2, in which Lite decides on Issue Refund because of the user’s dissatisfaction, but Pro decides on No Action. Does this mean that Pro understood that the good condition of the box meant that no refund was technically warranted? I remain unconvinced, especially at this small sample size (N=4). In any case, had we provided more guidance in the system text on Cardboard & Beyond’s predisposition towards refunds, we might expect different results.

Indeed, this is where fine-tuning could come in. By training one of the Nova models through Bedrock on the images, text and remedial actions of previous customer feedback, that model would start to behave as expected, allowing more trust in its automated decisions.

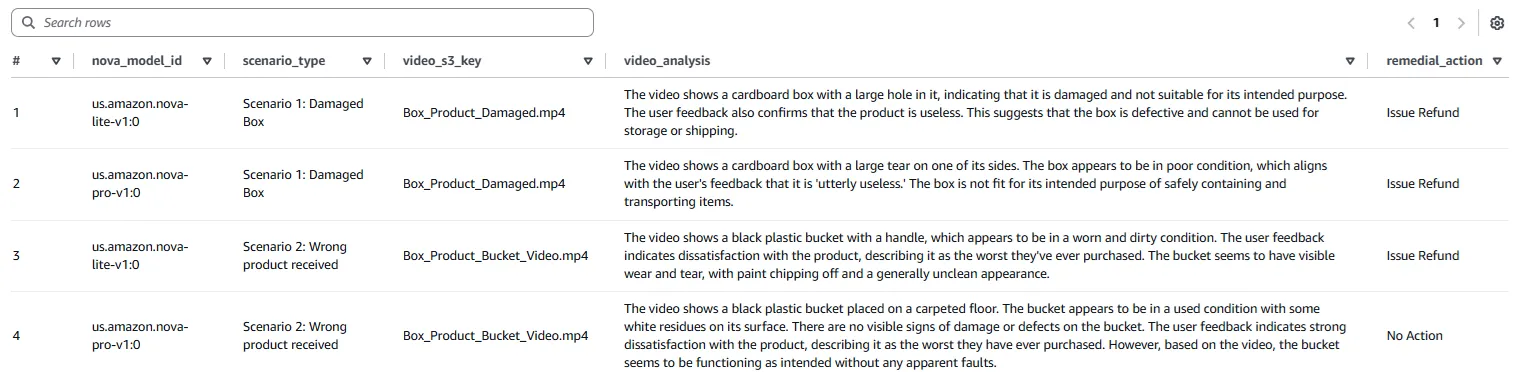

Video Analysis

Let’s now try out Nova Lite and Pro with videos.

Here, we have two of the scenarios we saw in the Image Analysis section. Originally, these had audio with them in which I, the customer, would air my grievances. However, as these models cannot process audio[5], and as frankly no one needs to be subjected to my bad acting, I have removed the audio track.

Note: Some branding marks have been blurred from the first video; the analysis was conducted on the blurred video.

Just two scenarios here:

Scenario 1 - Damaged Box

The video depicts the customer showing the extent of the damage to the box. Their written feedback was ‘Do not buy this. It’s utterly useless’.

Harsh words, today!

Scenario 2 - Wrong Product Received

The video is a fairly static shot of a bucket. The customer’s written feedback was ‘This is the worst product I’ve ever purchased’.

The hyperbole might be warranted in this case.

In Scenario 2, the written feedback does not mention the specific complaint of receiving the wrong product, so it will be interesting to see what Nova Lite/Pro come up with.

The Bedrock Invoke API call is only slightly different to the Image Analysis call:

    # Define the model ID for Nova Lite/Pro
    #MODEL_ID = 'us.amazon.nova-lite-v1:0'
    MODEL_ID = 'us.amazon.nova-pro-v1:0'

    remedial_action_list = ["Issue Refund", "No Action", "Contact Customer"]

    # Prepare the request payload.
    # `user_feedback` is the customer's written feedback and `video_s3_uri` is the
    # S3 URI of the video object; both come from data preparation (not shown).
    payload = {
        "system": [
            {
                "text": "Act as an video-analysis assistant. When the user provides you with a video of a product and the name of the product, you must analyse and summarize what, if anything, is wrong with the product using the video and the user feedback. Then, you should identify the correct remedial action from the following list: %s. Provide the result in the form { \"video_analysis\": \"<video_analysis>\", \"remedial_action\": \"<remedial_action>\" }" % str(remedial_action_list)
            }
        ],
        "schemaVersion": "messages-v1",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "text": f"Here is an video of the faulty product. The product is a cardboard box. The user feedback is {user_feedback}"
                    },
                    {
                        "video": {
                            "format": "mp4",
                            "source": {
                                "s3Location": {
                                    "uri": video_s3_uri
                                }
                            }
                        }
                    }
                ]
            }
        ],
        "inferenceConfig": {
            "max_new_tokens": 300,
            "top_p": 0.1,
            "top_k": 20,
            "temperature": 0.3
        }
    }

    # Invoke the model
    response = bedrock_runtime_client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps(payload)
    )

Much like for the image analysis, the system text asks the model to act as a video-analysis assistant in order to summarize issues with the product, providing a remedial action of either Issue Refund, No Action or Contact Customer. In the user text, along with the written feedback, the model is told that the product is a cardboard box. This time we are able to send an S3 location for the videos rather than sending bytes.

Video Analysis - Results

On the positive side, both Nova Lite and Pro have successfully identified both the cardboard box and the bucket, including the damage to the box in Scenario 1. Combined with the user feedback, both models identified the remedial action of Issue Refund correctly.

Unfortunately, the analysis of Scenario 2 is rather superficial. Yes, Nova Pro identified that there was a bucket and that it was a bit dirty - I’ve been painting a wall - but it took only the visual cues to determine a remedial action. A refund should have been issued in Scenario 2, but as the bucket was “functioning as intended”, Nova Pro decided No Action was required.

This is interesting, as we specified in the user text that the product was a cardboard box. On the other hand, perhaps both models weighed their responses based on the written feedback, which said nothing in this case about it being the wrong product.

It is likely that the prompt lacked clarity. Prompt engineering techniques such as few-shot prompting, in which examples of video and written feedback are provided to the model along with the expected output to calibrate it, might have yielded a better result.
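To give a flavour of what few-shot prompting could look like here, the sketch below prepends example user/assistant turns to the real request. Everything in `EXAMPLES` is made up for illustration, and `build_few_shot_messages` is a hypothetical helper. I have also kept the examples text-only, describing what a video showed rather than attaching it, on the assumption that it is safest to send a single video per request; that limit is worth checking against the Nova documentation. I have not tested whether this changes the outcome for Scenario 2.

```python
import json

# Hypothetical calibration examples: a text description of what a video showed,
# the written feedback, and the answer we would have wanted.
EXAMPLES = [
    {
        "prompt": "The product is a cardboard box. The video shows a plastic bucket. "
                  "The user feedback is: Terrible.",
        "expected": {
            "video_analysis": "The customer received a bucket instead of a cardboard box.",
            "remedial_action": "Issue Refund",
        },
    },
    {
        "prompt": "The product is a cardboard box. The video shows an undamaged "
                  "cardboard box. The user feedback is: Does the job.",
        "expected": {
            "video_analysis": "The box is intact and matches the order.",
            "remedial_action": "No Action",
        },
    },
]


def build_few_shot_messages(examples, user_feedback, video_s3_uri):
    """Prepend example user/assistant turns to the real video request."""
    messages = []
    for example in examples:
        messages.append({"role": "user", "content": [{"text": example["prompt"]}]})
        messages.append(
            {"role": "assistant", "content": [{"text": json.dumps(example["expected"])}]}
        )
    # The final user turn carries the actual feedback and the video to analyse
    messages.append(
        {
            "role": "user",
            "content": [
                {"text": "Here is a video of the product. The product is a cardboard box. "
                         f"The user feedback is {user_feedback}"},
                {"video": {"format": "mp4", "source": {"s3Location": {"uri": video_s3_uri}}}},
            ],
        }
    )
    return messages
```

The returned list would replace the `messages` value in the payload, with the system text left unchanged.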

Conclusion

In this post, we have explored the use of the Amazon Nova “Understanding” models (Micro, Lite and Pro) within the context of a business workflow. The responses are promising, with all of the models performing well for the specific summarization and analysis tasks set. Of course, some of the answers given by Lite and Pro were naive, but equally it is unfair to expect a general foundation model to understand the full context of its task without sufficient fine-tuning and prompting.

We have not yet taken the opportunity to benchmark these three models against those from Anthropic or OpenAI, but nevertheless there’s clearly a lot of potential to use these models for subjective analysis and automated decisions. The Nova suite will, I’m sure, keep pace with the advancements of competitors, and it will be exciting to see what is possible in the future.

What’s Next?

As we mentioned earlier, Amazon Nova also provides Creative Content Generation foundation models, with the ability to generate images and videos from text and sample images. If you’d like to read about extending our fictional workflow to these, make sure to check out this post: Amazon Nova In Action - Canvas and Reel.

References

[1] - Amazon Web Services. What is Amazon Nova? https://docs.aws.amazon.com/nova/latest/userguide/what-is-nova.html

[2] - Amazon Web Services. Amazon Nova Micro, Lite, and Pro - AWS AI Service Cards. https://docs.aws.amazon.com/ai/responsible-ai/nova-micro-lite-pro/overview.html

[3] - Amazon Web Services. Text understanding prompting best practices. https://docs.aws.amazon.com/nova/latest/userguide/prompting-text-understanding.html

[4] - Amazon Web Services. Complete request schema. https://docs.aws.amazon.com/nova/latest/userguide/complete-request-schema.html

[5] - Amazon Web Services. Video understanding limitations. https://docs.aws.amazon.com/nova/latest/userguide/prompting-vision-limitations.html