Amazon S3 Tables - What is an S3 Table?

Estimated Reading Time: 10 minutes

Last Updated: 2025-01-01

Disclaimer: The details described in this post are the results of my own research and investigations. While some effort has been made to ensure their accuracy - with frequent references to source material - I cannot guarantee that accuracy. The views and opinions expressed on this blog are my own and do not necessarily reflect the views of any organization I am associated with, past or present.

Over the past few years, many companies have adopted the Data Lakehouse paradigm via table formats like Apache Iceberg, Apache Hudi and Delta Lake. AWS has not been idle during this adoption: organizations can now exercise CRUD functionality on their S3 data lakes through query engines via deep integration of these table formats with the Glue Data Catalog.

Throughout 2024, AWS has continued to add to its arsenal of supporting features in this area, with automated compaction and storage optimizations released at the beginning of Q4 2024[1]. While these features indicated the general direction AWS was taking, the announcement of S3 Tables at re:Invent 2024 shows how sharply AWS is now focused on data lakehousing.

What are S3 Tables?

An S3 Table is an AWS-managed Apache Iceberg table, with “purpose-built S3 storage for storing structured data in Apache Parquet format”[2].

In other words, AWS has created a new type of S3 bucket that has been optimized specifically for:

- Storing the data and metadata of Apache Iceberg tables; and

- Enabling faster reads and writes from query engines than traditional S3 storage

AWS also maintains S3 Tables automatically through the following Iceberg operations[3]:

- Compaction (rewriting many small files into fewer, larger files for better read performance)[8]

- Snapshot management (deletion of expired snapshots)[8]

- Unreferenced file removal (deletion of objects not referenced by any snapshots)[9]
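With a self-managed Iceberg table, you would normally schedule these three jobs yourself. The following is a minimal sketch that assumes Spark with the Iceberg SQL extensions enabled. The Python only builds the SQL for Iceberg's `rewrite_data_files`, `expire_snapshots` and `remove_orphan_files` stored procedures, and you would then pass each statement to `spark.sql(...)`. The catalog name `my_catalog`, the retention values and the optional compaction predicate are illustrative assumptions, not S3 Tables defaults.

```python
from datetime import datetime, timedelta, timezone


def iceberg_maintenance_sql(catalog, table, retain_last=1, max_age_hours=120,
                            where=None, now=None):
    """Build Spark SQL CALL statements for Iceberg's three maintenance jobs.

    All argument values are illustrative, not S3 Tables defaults.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = (now - timedelta(hours=max_age_hours)).strftime("%Y-%m-%d %H:%M:%S")

    # Compaction: rewrite many small data files into fewer, larger ones.
    # An optional predicate limits the rewrite to matching data (this simple
    # sketch does not escape single quotes inside the predicate).
    args = f"table => '{table}'"
    if where:
        args += f", where => '{where}'"
    compaction = f"CALL {catalog}.system.rewrite_data_files({args})"

    # Snapshot management: expire snapshots older than the cutoff, but always
    # keep the most recent `retain_last` snapshots.
    expire = (f"CALL {catalog}.system.expire_snapshots(table => '{table}', "
              f"older_than => TIMESTAMP '{cutoff}', retain_last => {retain_last})")

    # Unreferenced file removal: delete files that no snapshot references and
    # that are older than the cutoff.
    orphans = (f"CALL {catalog}.system.remove_orphan_files(table => '{table}', "
               f"older_than => TIMESTAMP '{cutoff}')")

    return [compaction, expire, orphans]


if __name__ == "__main__":
    for stmt in iceberg_maintenance_sql("my_catalog", "sandbox_ns.transaction_details"):
        print(stmt)  # on a real cluster: spark.sql(stmt)
```

The `where` argument of `rewrite_data_files` restricts a self-managed compaction job to a subset of the table's data.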

In theory, then, S3 Tables can relieve long-suffering data engineers of the burden of periodic maintenance jobs, and benefit organizations through improved query performance.

Naturally, AWS has also considered how these tables will be integrated with other AWS analytics services. Through an extension of the Glue Data Catalog with AWS Lake Formation, the following services can query the data from an S3 Table[3]:

- Amazon Athena

- AWS Glue

- Amazon EMR

- Amazon Redshift

- Amazon QuickSight

- Apache Spark[4]

You can also write data to an S3 Table using Amazon Data Firehose[5].

Catalogs, S3 Table Buckets, Namespaces and S3 Tables

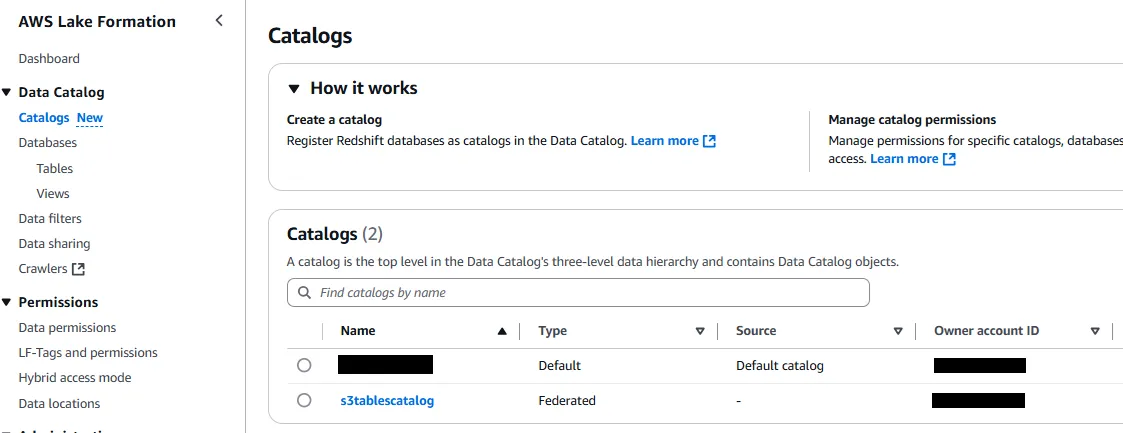

AWS has extended the Glue Data Catalog and AWS Lake Formation to accept new account-level catalogs. Previously, there was just one account-level catalog (the default catalog, named after the AWS Account ID). When S3 Tables are integrated with AWS analytics services, however, a new, ‘federated’ catalog called s3tablescatalog is created at the account level.

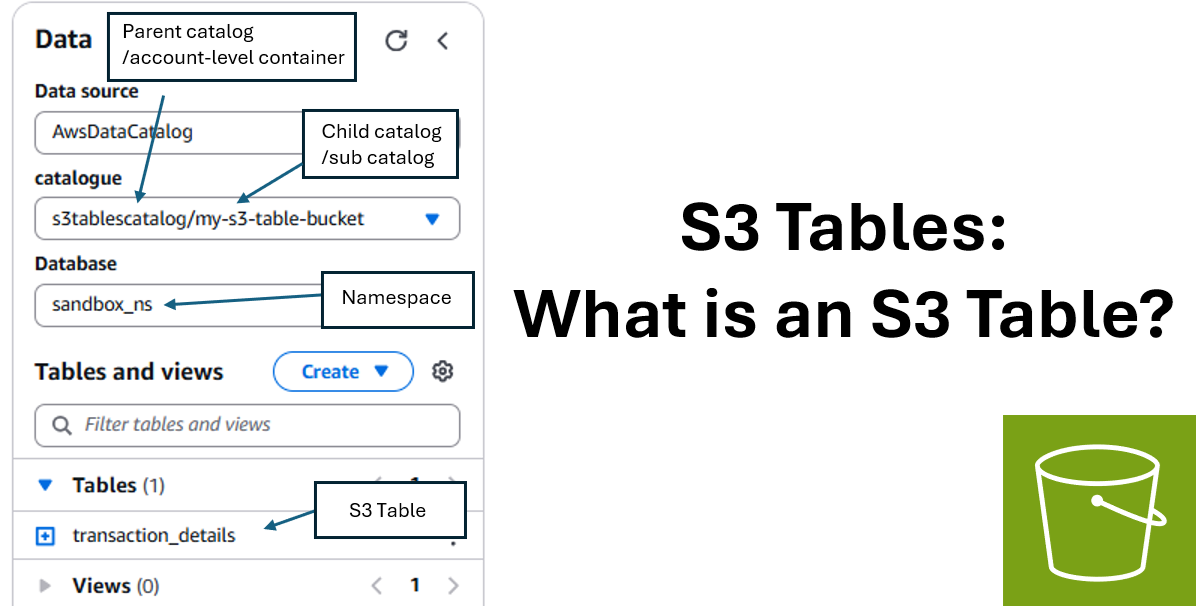

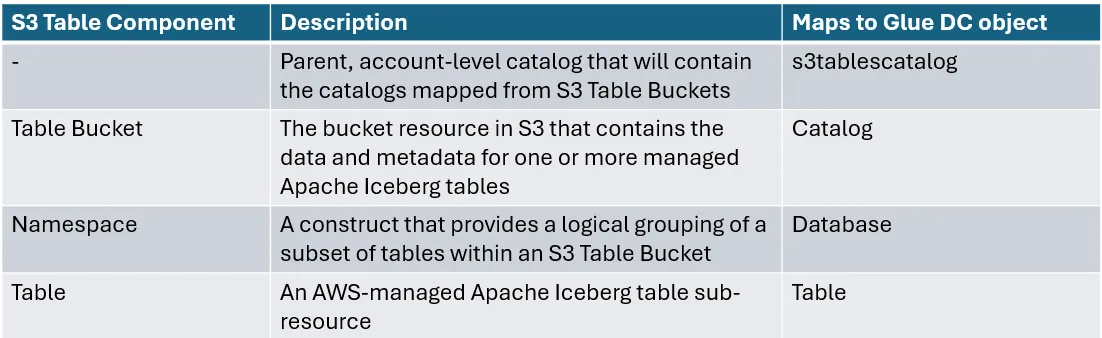

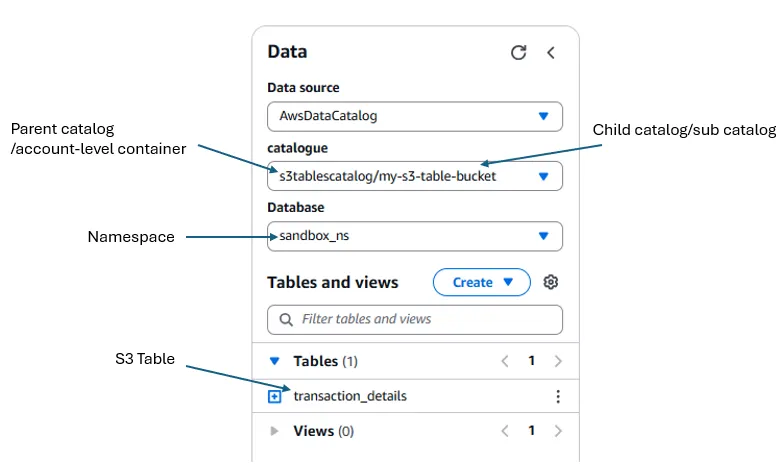

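S3 Tables are organized under a hierarchy of constructs and resources that map to Glue Data Catalog objects. Generally, the mapping is as follows[6][7]:

- s3tablescatalog maps to a federated catalog in the Glue Data Catalog

- Each S3 Table Bucket maps to a sub-catalog within s3tablescatalog

- Each Namespace maps to a Glue database

- Each S3 Table maps to a Glue table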

If you create an S3 Table by following the instructions in the next post or the AWS tutorial, you will be able to see this mapping within Amazon Athena itself:

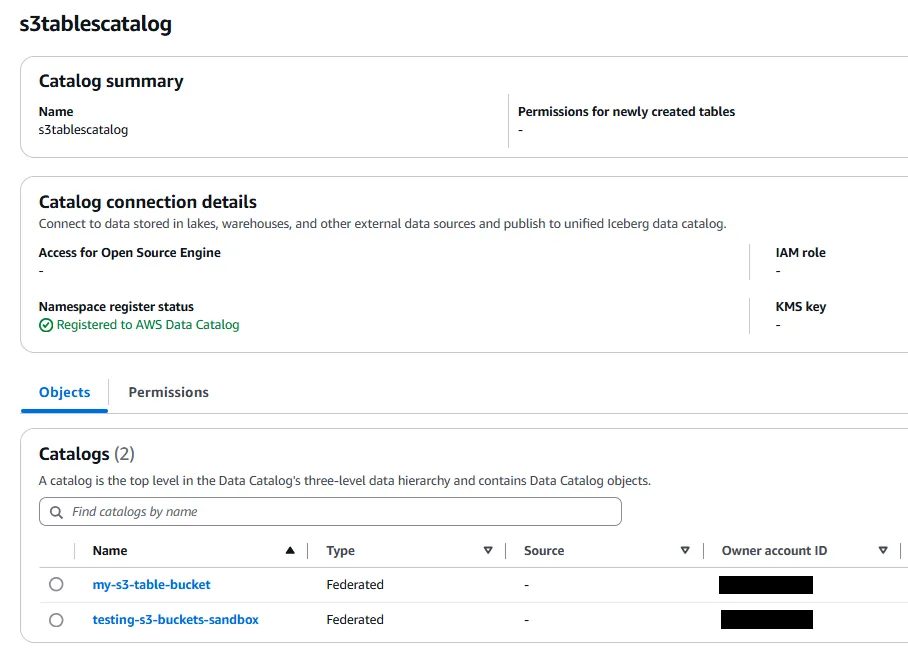

The s3tablescatalog we saw in AWS Lake Formation is only created when you enable the integration of S3 Tables with AWS analytics services. Clicking into it shows that a new sub-catalog is indeed created for each S3 Table Bucket (here, my-s3-table-bucket and testing-s3-buckets-sandbox are my S3 Table Buckets):

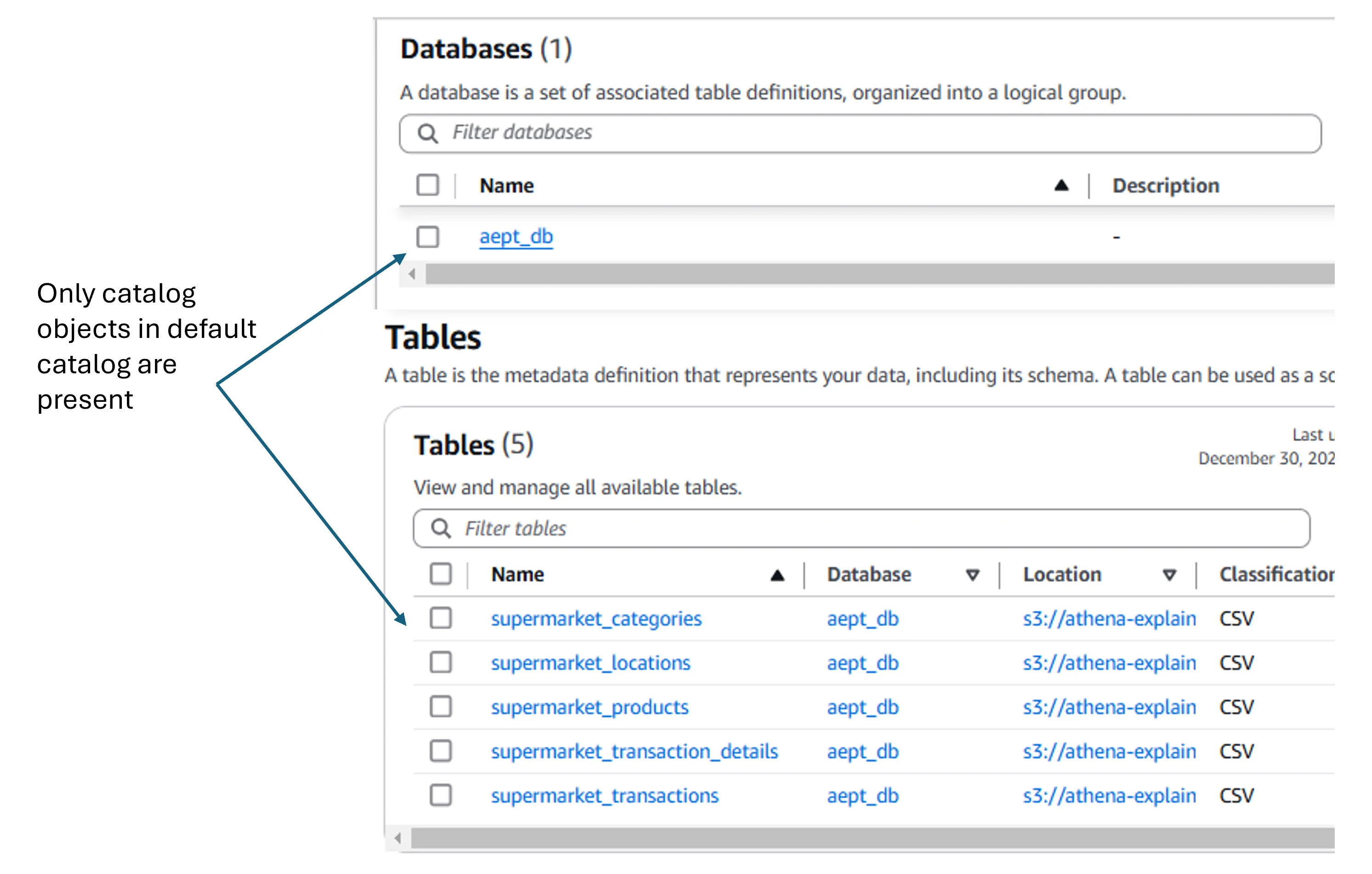

Interestingly, while AWS Lake Formation and Amazon Athena are clearly working together, none of the S3 Table components appear at all in AWS Glue under the Data Catalog, which displays only the default Catalog objects.
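The mapping between S3 Tables constructs and Glue Data Catalog objects can be expressed as a small helper. This is a sketch, not an AWS API. `S3TableRef` is a hypothetical class and the account ID is a placeholder. The two name formats are my assumptions based on the AWS examples, so check them against the current documentation:

- `<account-id>:s3tablescatalog/<bucket>` for the Glue catalog ID
- a quoted `"s3tablescatalog/<bucket>"` catalog prefix for fully qualified Athena names

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class S3TableRef:
    """Hypothetical identifier for an S3 Table, using its S3-side names."""
    account_id: str
    table_bucket: str
    namespace: str
    table: str

    def glue_catalog_id(self) -> str:
        # Each table bucket becomes a sub-catalog of the federated
        # s3tablescatalog (format assumed from the AWS examples).
        return f"{self.account_id}:s3tablescatalog/{self.table_bucket}"

    def athena_name(self) -> str:
        # catalog -> database (namespace) -> table; every part is double-quoted
        # because the catalog name contains a slash.
        return f'"s3tablescatalog/{self.table_bucket}"."{self.namespace}"."{self.table}"'


if __name__ == "__main__":
    ref = S3TableRef("111122223333", "my-s3-table-bucket", "sandbox_ns", "transaction_details")
    print(ref.glue_catalog_id())
    print(ref.athena_name())
```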

Querying an S3 Table

The hard part of working with an S3 Table is creating it in the first place. This is currently only possible through Spark running on an Amazon EMR cluster, or through applications that implement the AWS Labs S3 Tables Catalog library. As far as I am aware, it is not possible to create an S3 Table with columns through Amazon Athena, Amazon Redshift or other query engines that don’t implement this library.
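For the EMR route, the AWS documentation on Spark integration[4] registers a table bucket as an Iceberg catalog through a handful of Spark properties. The sketch below collects those properties and the DDL for a namespace and table. The catalog name `s3tablesbucket`, the ARN and the column names are hypothetical. The S3 Tables Catalog runtime JAR must also be on the Spark classpath, which is not shown here.

```python
def s3_tables_spark_conf(table_bucket_arn, catalog="s3tablesbucket"):
    """Spark properties that expose an S3 Table Bucket as an Iceberg catalog."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.catalog-impl": "software.amazon.s3tables.iceberg.S3TablesCatalog",
        f"{prefix}.warehouse": table_bucket_arn,
        "spark.sql.extensions": "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
    }


def create_table_sql(catalog, namespace, table, columns):
    """DDL for spark.sql(); `columns` maps column name -> Spark SQL type."""
    cols = ", ".join(f"{name} {dtype}" for name, dtype in columns.items())
    return [
        f"CREATE NAMESPACE IF NOT EXISTS {catalog}.{namespace}",
        f"CREATE TABLE IF NOT EXISTS {catalog}.{namespace}.{table} ({cols}) USING iceberg",
    ]


if __name__ == "__main__":
    arn = "arn:aws:s3tables:us-east-1:111122223333:bucket/my-s3-table-bucket"
    for key, value in s3_tables_spark_conf(arn).items():
        print(f"--conf {key}={value}")
    # Hypothetical columns for illustration only.
    for stmt in create_table_sql("s3tablesbucket", "sandbox_ns", "transaction_details",
                                 {"transaction_id": "string", "amount": "double"}):
        print(stmt)
```

With PySpark, you would pass each key/value pair to `SparkSession.builder.config(key, value)` before calling `getOrCreate()`, and pass each DDL string to `spark.sql(...)`.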

AWS Lake Formation Permissions

For this example, I created an S3 Table via EMR with the following components:

- S3 Table Bucket Name = my-s3-table-bucket

- Namespace = sandbox_ns

- S3 Table Name = transaction_details

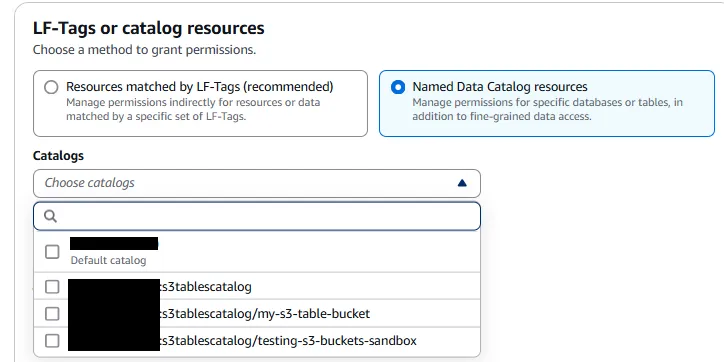

In order to query it through Amazon Athena, I had to grant my IAM user read permissions through Lake Formation, which I could do on the parent catalog s3tablescatalog or the sub-catalog for my corresponding S3 Table Bucket.

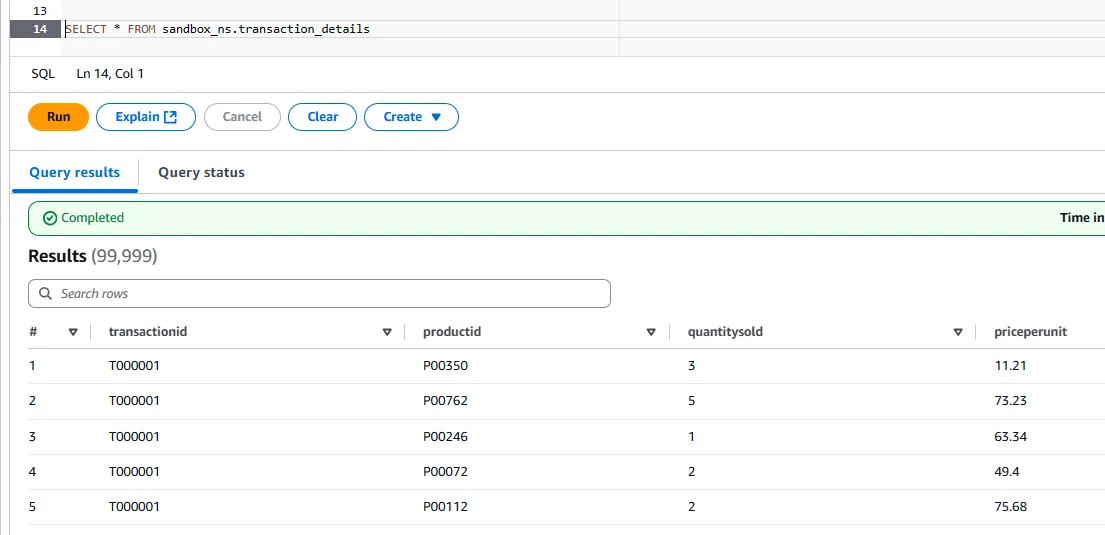
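If you prefer to script this grant rather than use the console, a programmatic equivalent might look like the following sketch. It builds the keyword arguments for Boto3's `lakeformation.grant_permissions()`. The principal ARN and account ID are placeholders. The `CatalogId` format (`<account-id>:s3tablescatalog/<table bucket>`) is an assumption based on my reading of the AWS examples.

```python
def lake_formation_select_grant(principal_arn, account_id, table_bucket, namespace, table):
    """Keyword arguments for boto3.client("lakeformation").grant_permissions()."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "Table": {
                # Sub-catalog of s3tablescatalog for this table bucket (assumed format).
                "CatalogId": f"{account_id}:s3tablescatalog/{table_bucket}",
                "DatabaseName": namespace,  # the namespace is exposed as a database
                "Name": table,
            }
        },
        # Read access: query the data and see the table's metadata.
        "Permissions": ["SELECT", "DESCRIBE"],
    }


if __name__ == "__main__":
    kwargs = lake_formation_select_grant(
        "arn:aws:iam::111122223333:user/my-iam-user",  # placeholder principal
        "111122223333", "my-s3-table-bucket", "sandbox_ns", "transaction_details")
    print(kwargs)
    # boto3.client("lakeformation").grant_permissions(**kwargs)
```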

Once the permission was granted, I was able to query the table in the same way as any other Athena table.

As an aside, notice that the query has this form:

```sql
SELECT * FROM {namespace}.{s3_table};
```

where, again, the namespace is treated as a Glue/Athena database.
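You could also submit the same query programmatically. The following is a minimal sketch. It assumes that Boto3's `athena.start_query_execution()` accepts the table bucket's sub-catalog (`s3tablescatalog/<table bucket>`) as the `Catalog` in `QueryExecutionContext`, with the namespace as the `Database`. The results location is a placeholder.

```python
def athena_query_kwargs(table_bucket, namespace, table, output_location):
    """Keyword arguments for boto3.client("athena").start_query_execution()."""
    return {
        # Same {namespace}.{s3_table} form as the console query.
        "QueryString": f"SELECT * FROM {namespace}.{table}",
        "QueryExecutionContext": {
            # Assumed: the table bucket's sub-catalog acts as the catalog and
            # the namespace as the database.
            "Catalog": f"s3tablescatalog/{table_bucket}",
            "Database": namespace,
        },
        "ResultConfiguration": {"OutputLocation": output_location},
    }


if __name__ == "__main__":
    print(athena_query_kwargs("my-s3-table-bucket", "sandbox_ns", "transaction_details",
                              "s3://my-athena-results/"))  # placeholder bucket
```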

Other SQL Operations

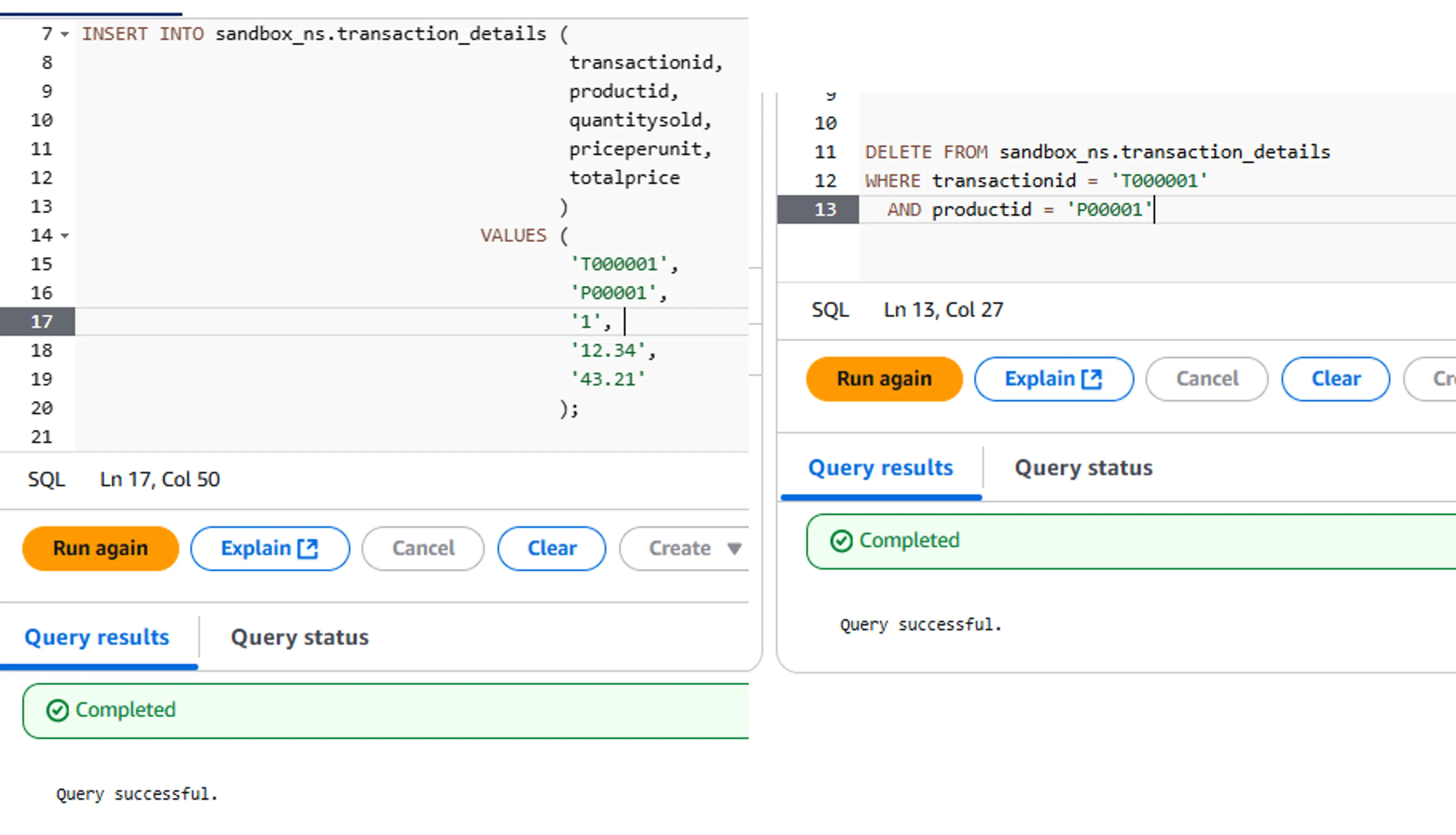

With suitable permissions granted, inserts and deletes on the S3 Table behaved as expected without any problems:

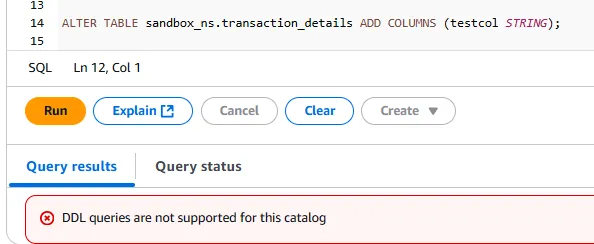

On the other hand, DDL operations do not appear to be supported through Amazon Athena, so schema changes currently still need to be made through Amazon EMR.
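As an illustration of a schema change that still has to go through EMR, Iceberg's Spark DDL supports `ALTER TABLE ... ADD COLUMNS`. The helper below is hypothetical and only builds the statement. It assumes a Spark session on EMR in which the table bucket is registered as an Iceberg catalog named `s3tablesbucket` (a hypothetical name). `currency` is a made-up column.

```python
def add_column_sql(catalog, namespace, table, column, dtype, comment=None):
    """Build an Iceberg schema-evolution statement to run with spark.sql()."""
    stmt = f"ALTER TABLE {catalog}.{namespace}.{table} ADD COLUMNS ({column} {dtype}"
    if comment:
        # Optional column comment; single quotes in `comment` are not escaped here.
        stmt += f" COMMENT '{comment}'"
    return stmt + ")"


if __name__ == "__main__":
    print(add_column_sql("s3tablesbucket", "sandbox_ns", "transaction_details",
                         "currency", "string"))
```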

Iceberg Table Metadata

One of the biggest advantages of S3 Tables is their automated maintenance, which can be troublesome to perform manually if you have a lot of self-managed tables. Unfortunately, this advantage is a double-edged sword.

When Iceberg tables are self-managed, users have access to the underlying metadata showing all of the files, manifests, partitions and snapshots that constitute a given table. This is useful because it can help identify skewed partitions that might be affecting the performance of reads and writes to that table.

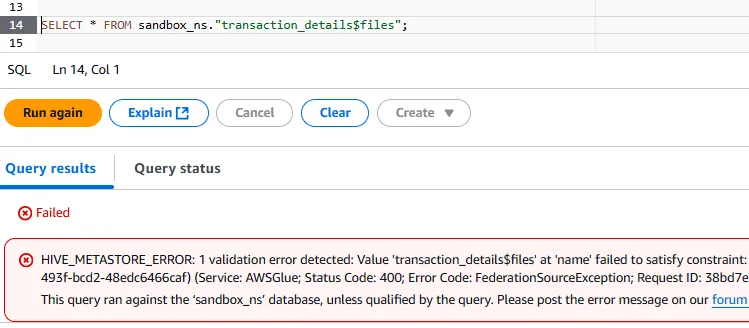

With S3 Tables, however, these metadata are not provided.

Although AWS handles the optimization of S3 Tables, the lack of transparency into skew, the number of partitions and so on could mean that a table is improperly partitioned without the user knowing, resulting in slower queries and higher costs. I would hope that in the future AWS will provide a dashboard showing some of these metadata and partition statistics, perhaps even with recommendations for new partitioning. Time will tell.
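On a self-managed table, this kind of check is straightforward. Iceberg exposes a `partitions` metadata table with per-partition `record_count` and `file_count` columns. In Spark you can query it as `SELECT partition, record_count FROM <catalog>.<namespace>.<table>.partitions`. The sketch below takes rows like these and flags partitions whose record count is far above the median. The function name and the 5x threshold are arbitrary choices for illustration.

```python
from statistics import median


def skewed_partitions(rows, threshold=5.0):
    """Return partitions whose record count is >= threshold x the median.

    `rows` are (partition, record_count) pairs, e.g. collected from an Iceberg
    table's `partitions` metadata table.
    """
    counts = [count for _, count in rows]
    if not counts:
        return []
    mid = median(counts)
    if mid == 0:
        # With a zero median, any non-empty partition stands out.
        return [part for part, count in rows if count > 0]
    return [part for part, count in rows if count / mid >= threshold]
```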

AWS CLI, SDK and REST API Commands

Putting Amazon EMR to one side, AWS has provided a number of CLI, SDK (e.g. Boto3) and S3 REST API commands to interact with S3 Tables.

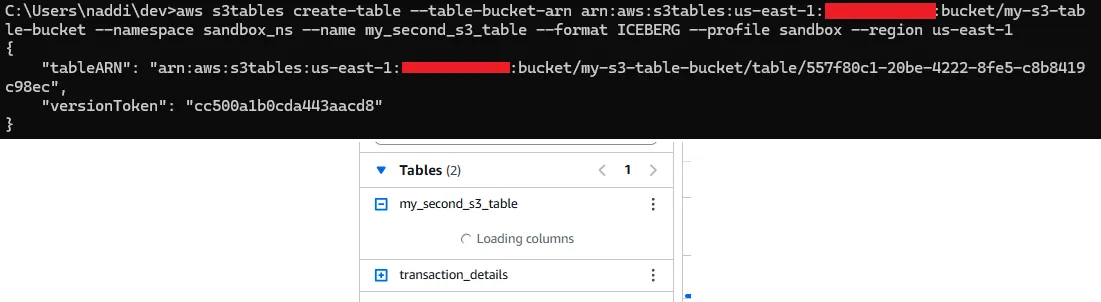

At the moment, you won’t get far using these commands alone. For example, you can easily create an S3 Table Bucket and Namespace using the create-table-bucket and create-namespace commands. However, if you then try to create a table using the create-table command, it will create a table entry but you can’t add any columns to it. Athena will perpetually try to load columns that do not exist.
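For reference, the command sequence described above might look like this with Boto3's `s3tables` client. This is a sketch. The client is passed in (for example `boto3.client("s3tables")`) so you can try the flow without credentials, and the names are the ones used earlier in this post. The resulting table has no columns.

```python
def provision_empty_table(client, bucket_name, namespace, table_name):
    """Create a table bucket, a namespace and a (column-less) Iceberg table.

    `client` is expected to behave like boto3.client("s3tables").
    """
    bucket_arn = client.create_table_bucket(name=bucket_name)["arn"]
    # Namespaces are passed as a list containing a single name.
    client.create_namespace(tableBucketARN=bucket_arn, namespace=[namespace])
    # This registers a table entry, but no schema is defined for it.
    response = client.create_table(
        tableBucketARN=bucket_arn,
        namespace=namespace,
        name=table_name,
        format="ICEBERG",
    )
    return bucket_arn, response["tableARN"]
```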

Earlier, we also showed that Athena (and likely other query engines) cannot add new columns or otherwise alter the table schema. As a result, instead of using these commands, you are probably better off just using Amazon EMR until S3 Tables is developed further.

Table Maintenance Configuration

Automated table maintenance (compaction and snapshot management) can be toggled on and off through the put-table-maintenance-configuration command, which takes one of the following maintenance types along with its settings:

- icebergCompaction

  - targetFileSizeMB - sets the target size of Iceberg data files after compaction

- icebergSnapshotManagement

  - minSnapshotsToKeep - the minimum number of Iceberg snapshots (collection of metadata and data files) to keep prior to the current snapshot

  - maxSnapshotAgeHours - the maximum age in hours to retain a snapshot before it is deleted

For more details about setting the table maintenance configuration, please see the AWS documentation on S3 Tables maintenance[8].
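Here is a minimal sketch of the two configuration types. It builds keyword arguments for Boto3's `s3tables.put_table_maintenance_configuration()`. The parameter layout reflects my understanding of the API. The numeric values are arbitrary examples, not AWS defaults.

```python
def _maintenance_kwargs(bucket_arn, namespace, table, kind, settings, enabled):
    # Shared request shape: which table, which maintenance type, and its value.
    return {
        "tableBucketARN": bucket_arn,
        "namespace": namespace,
        "name": table,
        "type": kind,
        "value": {
            "status": "enabled" if enabled else "disabled",
            "settings": {kind: settings},
        },
    }


def compaction_config(bucket_arn, namespace, table, target_file_size_mb, enabled=True):
    """icebergCompaction: target size of data files after compaction."""
    return _maintenance_kwargs(bucket_arn, namespace, table, "icebergCompaction",
                               {"targetFileSizeMB": target_file_size_mb}, enabled)


def snapshot_config(bucket_arn, namespace, table, min_snapshots_to_keep,
                    max_snapshot_age_hours, enabled=True):
    """icebergSnapshotManagement: how many snapshots to keep and for how long."""
    return _maintenance_kwargs(
        bucket_arn, namespace, table, "icebergSnapshotManagement",
        {"minSnapshotsToKeep": min_snapshots_to_keep,
         "maxSnapshotAgeHours": max_snapshot_age_hours},
        enabled,
    )
```

Each result would be passed as `boto3.client("s3tables").put_table_maintenance_configuration(**kwargs)`.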

Pricing

The costs of S3 Tables can be found in the Tables section of the S3 Pricing page.

Storage for an S3 Table ($0.0265/GB per month) is slightly more expensive than S3 Standard in a general purpose bucket ($0.023/GB per month), a premium of roughly 15%, but PUT and GET requests cost the same in each bucket type.

AWS provides an example of costs for a single table: a compacted 1TB S3 Table with an average object size of 100MB would cost $35/month. The bulk of this comes from the ‘S3 Tables storage charge’ at $27.14 and the ‘S3 Tables compaction - data processed charge’ at $7.32. This doesn’t sound too bad, but remember that this is just one table; if your organization has 100 such tables, the cost could really mount up.
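You can reproduce the storage figure from the per-GB price, assuming 1TB = 1024GB. The sketch below also compares it against S3 Standard and scales the storage and compaction charges to 100 identical tables. It ignores any other charges in AWS's example, so the per-table total falls slightly short of $35. It also assumes the compaction charge recurs every month, as in AWS's example.

```python
GB_PER_TB = 1024

S3_TABLES_PER_GB_MONTH = 0.0265   # S3 Tables storage price quoted above
S3_STANDARD_PER_GB_MONTH = 0.023  # S3 Standard (general purpose bucket)
COMPACTION_PER_TABLE = 7.32       # compaction charge from AWS's 1TB example


def monthly_storage_cost(size_gb, price_per_gb_month):
    return size_gb * price_per_gb_month


tables_storage = monthly_storage_cost(1 * GB_PER_TB, S3_TABLES_PER_GB_MONTH)
standard_storage = monthly_storage_cost(1 * GB_PER_TB, S3_STANDARD_PER_GB_MONTH)
per_table = tables_storage + COMPACTION_PER_TABLE

print(f"S3 Tables storage (1TB):   ${tables_storage:.2f}")
print(f"S3 Standard storage (1TB): ${standard_storage:.2f}")
print(f"Storage + compaction, 100 tables: ${100 * per_table:,.2f}")
```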

It is also unclear whether compaction costs could be reduced in the future. The table maintenance configuration does not appear to allow WHERE clause predicates that would limit the data being compacted. Instead, compaction appears to run across the entire dataset every time, which could be hundreds of GBs. I expect we will see improvements here as the service matures.

Conclusion

S3 Tables is not quite ready for use in a production environment just yet. In fairness, its integration with AWS analytics services is still in preview at the time of writing, so it is probably best to experiment with it at this stage to evaluate its future potential.

For the feature to reach a production-ready level, I think query engines such as Redshift or Athena should be able to create and alter these tables, and more transparency is needed over the partitioning structures and other metadata of the underlying Iceberg tables. Nevertheless, I’m sure that this will be an area of active development throughout 2025, and that we will see S3 Tables start to overtake their self-managed counterparts.

References

[1] - Amazon Web Services. The AWS Glue Data Catalog now supports storage optimization of Apache Iceberg tables. https://aws.amazon.com/blogs/big-data/the-aws-glue-data-catalog-now-supports-storage-optimization-of-apache-iceberg-tables/

[2] - Amazon Web Services. Amazon S3 Tables. https://aws.amazon.com/s3/features/tables/

[3] - Amazon Web Services Documentation. Working with Amazon S3 Tables and table buckets. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables.html

[4] - Amazon Web Services Documentation. Querying Amazon S3 tables with Apache Spark. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables-integrating-open-source-spark.html

[5] - Amazon Web Services Documentation. Streaming data to tables with Amazon Data Firehose. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables-integrating-firehose.html

[6] - Amazon Web Services Documentation. Create a namespace. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables-namespace-create.html

[7] - Amazon Web Services Documentation. Tables. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables-tables.html

[8] - Amazon Web Services Documentation. S3 Tables maintenance. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables-maintenance.html

[9] - Amazon Web Services Documentation. Amazon S3 table bucket maintenance. https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-table-buckets-maintenance.html